AI agents are the topic du jour because of reported productivity gains, despite concerns about accuracy and security. According to the 2025 Stack Overflow Developer Survey, 87% of developers are concerned about the accuracy of information coming from AI agents, and 81% worry about the privacy and security of data when using them. The cost of AI agent platforms is also a barrier for 53% of respondents.

Despite these concerns, 23% of respondents already use AI agents in their work at least weekly, with another 8% using them infrequently. An additional 17% are planning to use AI agents, which are autonomous software entities that operate with minimal to no direct human intervention.

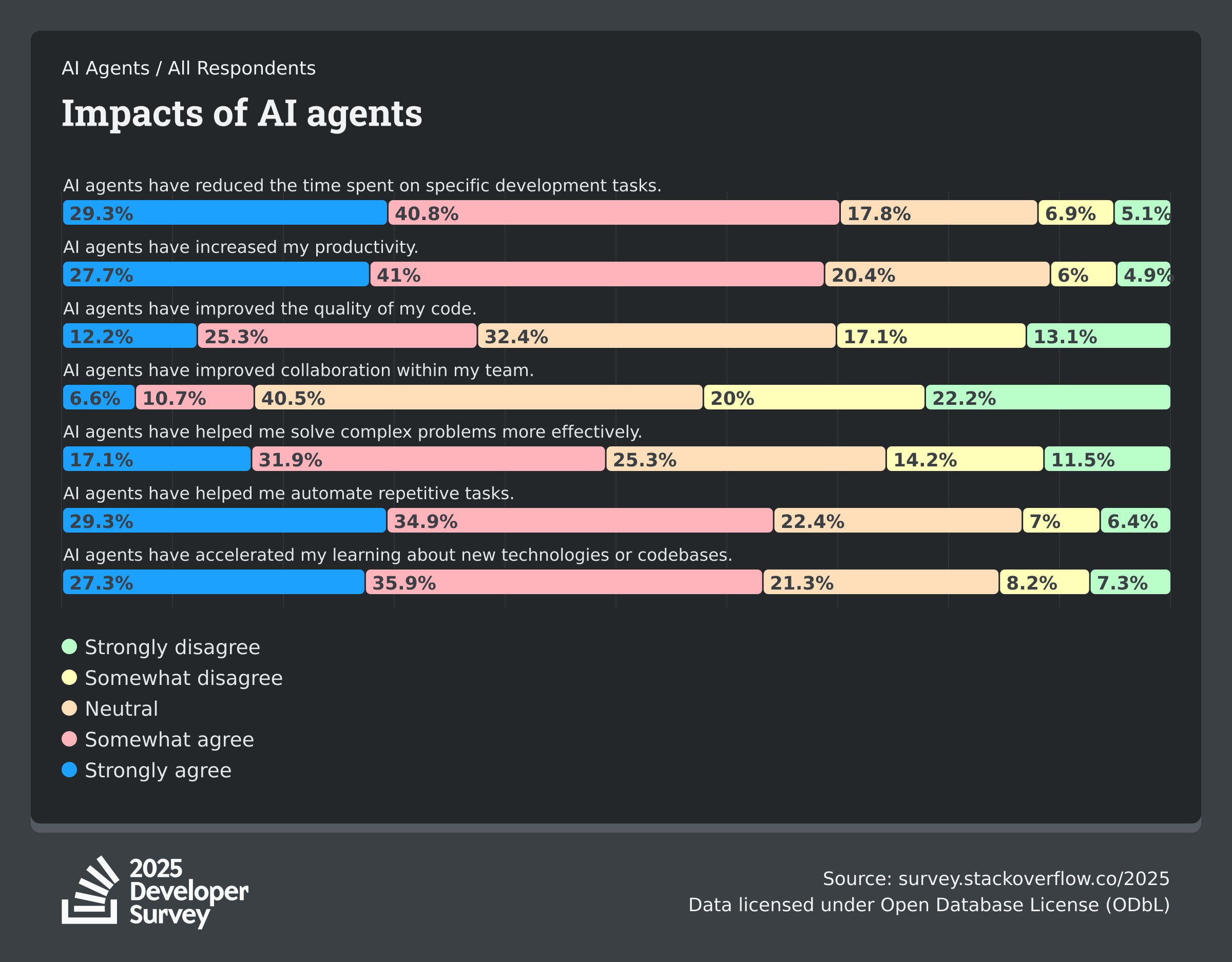

AI agents have gained users because 70% of developers who have used one believe it has reduced the time spent on specific development tasks. A similar number (69%) at least somewhat agree that they’ve made productivity gains.

There are two areas where AI agents have been less impactful. Only 17% believe agents have improved team collaboration, and only 36% feel that they have improved the quality of code.

Another interesting AI-related takeaway is that only 15% are doing vibe coding as part of their professional development work.

People who said they use AI agents were also asked, “To what extent do you agree with the following statements regarding AI agents?” Their responses are in this article’s featured image.

Taking a broader view of AI, we found that 78% of the survey respondents use AI tools in the development process, which is up from 62% in last year’s study. One of the biggest problems facing these tools is that 46% of developers don’t trust the accuracy of the output of AI tools. Still, 59% are favorably inclined towards using AI tools as part of their development workflow, compared to 21% with an unfavorable view. We don’t know how many of those who look negatively at AI tools have actually used them.

Overall, 66% of developers say that one frustration they have when using AI tools is dealing with “AI solutions that are almost right, but not quite.” That may help explain why other studies have found that developers are spending more time on code review, testing and debugging. In this study, 45% say that debugging AI-generated code is more time-consuming.

AI Agents Technology Roadmap

Developers who have used AI agents in the past year were asked about the tools and infrastructure they have used with AI agents. Here is a quick breakdown of the findings:

- Redis (43%) and GitHub MCP Server (43%) were the most used technologies for AI agent memory or data management. Supabase (21%), ChromaDB (20%), pgvector (18%), Neo4j (12%), and Pinecone (11%) have also attracted a significant number of users.

- Ollama (51%) and LangChain (33%) were most likely to be used for agent orchestration or agent frameworks. Other technologies at the top of the adoption chart are LangGraph (16%), Vertex AI (15%), Amazon Bedrock Agents (15%), OpenRouter (13%), and LlamaIndex (13%).

- Cited by 13%, LangSmith was the only purpose-built AI agent observability, monitoring or security solution to have gained significant traction. The leaders in this field come from vendors that cover developer observability and security more broadly and have since added AI-related functionality: Grafana + Prometheus (43%), Sentry (32%), Snyk (18%), and New Relic (13%).

- ChatGPT (82%), GitHub Copilot (68%), Google Gemini (47%) and Claude Code (41%) are the most used out-of-the-box agents/copilots/assistants. Lower on the list are v0.dev (9%), Bolt.new (7%), Lovable.dev (6%), and AgentGPT, Tabnine, Replit and Auto-GPT (each used by 5%).

The Stack Overflow study has been conducted for the last 15 years. This year’s version is based on over 49,000 respondents, with much of the global sample being similar to previous iterations of this annual survey.
